The next AI battleground will be around datasets, not just functionality

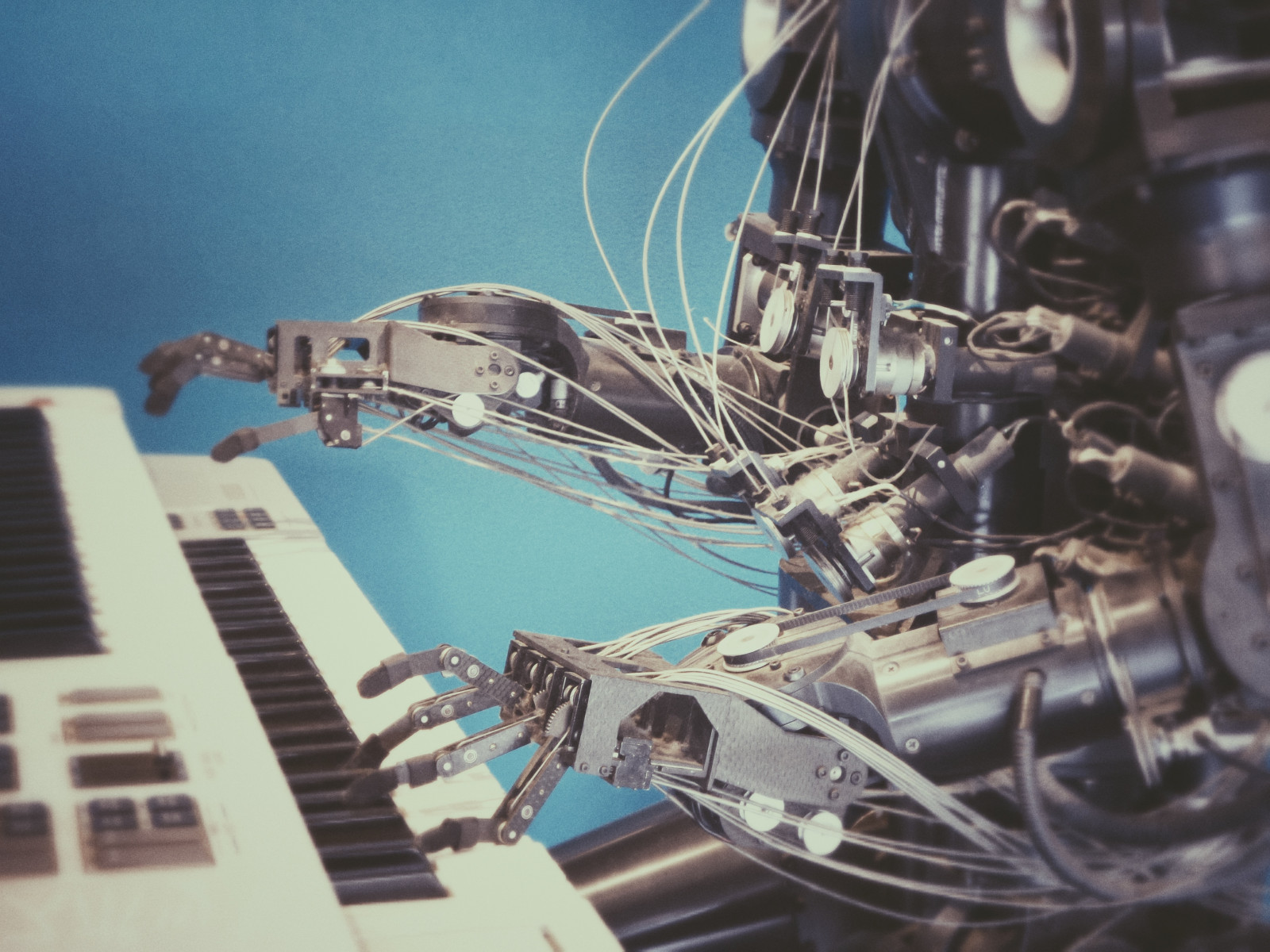

Photo: Possessed Photography

The conversation about AI has permeated every level of every industry, from architecture to music to politics. What it is, what it can do, and what it should be allowed to do are all hot topics – and yet the answers are perhaps simpler than all the hype might suggest.

An open letter published by the Future of Life Institute, and signed by Elon Musk as well as leading AI experts, has called for a pause on the development of anything more powerful than GPT-4, citing concerns for societal wellbeing if growth continues without safeguards. The message could have been ‘learn from our past mistakes’, but its delivery is more along the lines of ‘AI is scary, and we should stop’.

These concerns are shared by many experts throughout the field. However, they are somewhat out of step with the realities of the broader AI marketplace.

AI can be divided into a number of functions. In many ways, it is simply a more flexible automation process: assistants such as Cortana or Amazon’s Alexa perform simple tasks like setting reminders or turning on the lights (or ordering you things from the internet). Other programmes, like Salesforce Einstein, are essentially advanced CRM services. Just like the algorithms and software programmes released to market over the past two decades, they build on existing innovations with single(ish)-purpose solutions.

However, it is the machine learning and deep learning ‘bots’ (ML bots), such as ChatGPT, that are capturing the collective zeitgeist. And it is here that fears of unchecked development will come up against simple commercial and political constraints.

In short: any machine learning programme is only as good as the dataset it is trained on. So far, OpenAI and its ilk have been able to develop so quickly because their products were built without much thought to commercial viability. Built with more of an academic mindset, they could simply be trained on an internet-wide dataset of whatever their developers could get their hands on – which is both a pro and a con: see the early issues with Microsoft’s Sydney. The early popular success of DALL-E, Midjourney and co. was met with lawsuits and commercial uncertainty, as the rights status of the material underlying their output (never mind data privacy) was murky at best and infringing at worst. Italy has imposed a (possibly temporary) ban on ChatGPT over privacy concerns, pending an investigation, and may not be the last country to do so.

Meanwhile, in China – long a forerunner in AI technology – development of ML bots has been slower. This is due to a number of internal and political considerations: namely, the datasets on which the bots are trained are under far closer scrutiny than in the West, and are carefully checked and tested for their content and performance before release. Similarly, some Western AI companies are moving more slowly and carefully. In a briefing with MIDiA, Electric Sheep – a company building an AI for rotoscoping video – revealed that it is taking a ‘top-down’ approach: training its ML programme on rights-cleared, high-quality data first to make it commercially viable at the highest level, and only later broadening the product for more mainstream use. In short, you can start with a high-quality model and then build outwards, but if you start with a ‘messier’ dataset (be that in terms of rights clearances or anything else), there is no way to reliably clean it up later.

The proposed six-month moratorium on further development thus offers a simplistic response to an increasingly nuanced reality. The AI ‘arms race’ might turn into more of a tortoise-and-hare situation: slower, more carefully built programmes will come out later, but will be fully commercially viable, privacy-safe, rights-cleared and largely free of bugs, while the larger, faster-moving programmes will remain at risk of lawsuits and political bans, and thus liable to be eclipsed.

Finally, it is worth remembering the hype cycle. As with any new technology, AI currently has a firm grip on mainstream curiosity and enthusiasm. Ultimately, however, it will automate what can be automated, and in its wake most of the economy and people’s everyday lives will carry on largely unaffected; the hype will dip as AI settles into normalised use. There is only so much that can be automated, and most of it was largely ready for automation already. Nor can AI pose any problems or threats that did not already exist, although it can exacerbate them. At the end of the day, AI is a tool: its impact will be determined not so much by what it is as by how well it is built and how we choose to use it. In this sense, its underlying datasets are the key to the success or failure of any endeavour for which it is used.
